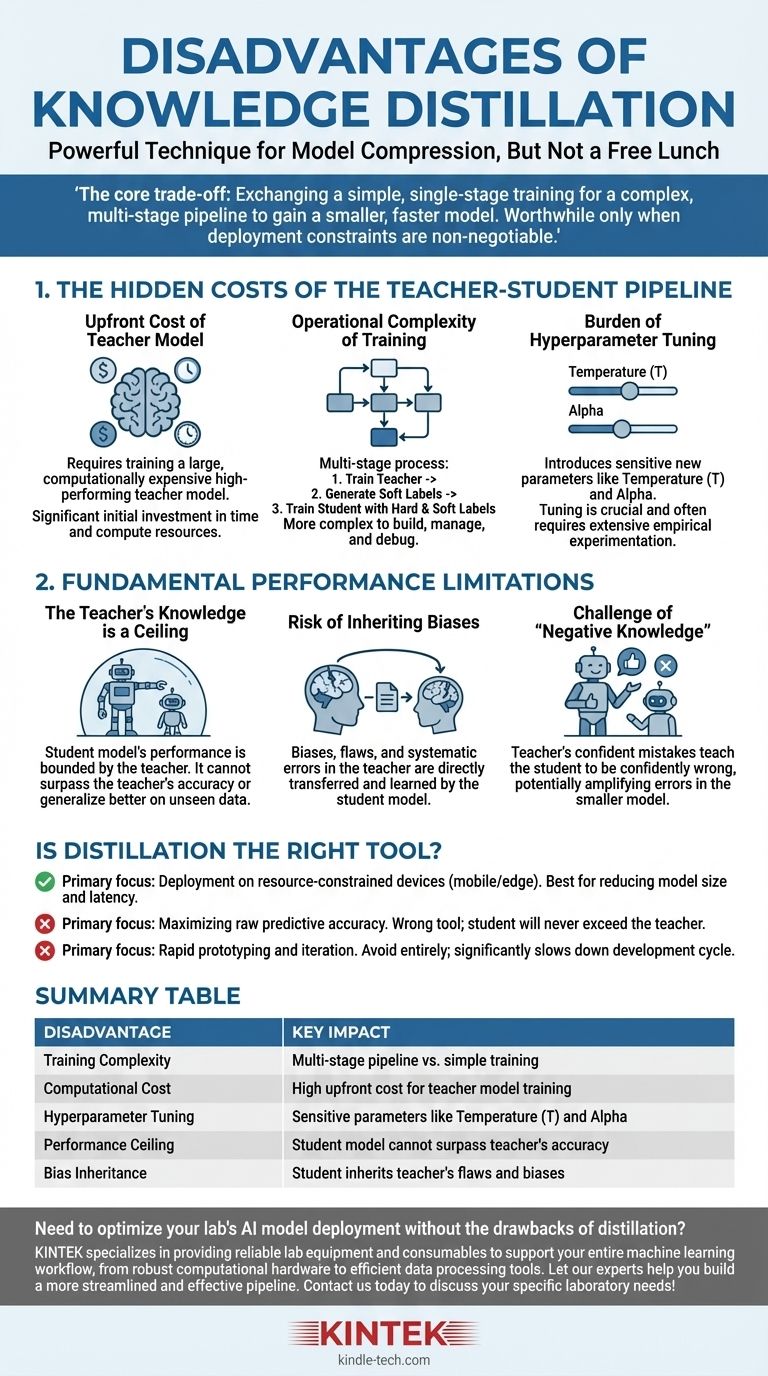

While knowledge distillation is a powerful technique for model compression, it is not a free lunch. The primary disadvantages are the significant increase in training complexity and computational cost, the introduction of sensitive new hyperparameters, and the hard performance ceiling imposed by the quality of the teacher model.

The core trade-off of distillation is clear: you are exchanging a simpler, single-stage training process for a complex, multi-stage pipeline to gain a smaller, faster model. This investment in complexity is only worthwhile when deployment constraints like latency or memory are non-negotiable.

The Hidden Costs of the Teacher-Student Pipeline

The most immediate drawbacks of distillation are not conceptual but practical. They involve the added time, resources, and engineering effort required to manage a more complex training workflow.

The Upfront Cost of the Teacher Model

Before you can even begin distillation, you need a high-performing teacher model. This model is, by design, large and computationally expensive to train.

This initial training phase represents a significant, non-trivial cost in both time and compute resources that must be paid before the "real" training of the student model can start.

The Operational Complexity of Training

Distillation is a multi-stage process, unlike standard model training. The typical workflow is:

- Train the large teacher model to convergence.

- Perform inference with the teacher model on your entire training dataset to generate the "soft labels" or logits.

- Train the smaller student model using both the original "hard labels" and the teacher's soft labels.

This pipeline is inherently more complex to build, manage, and debug than a standard training script.

The Burden of Hyperparameter Tuning

Distillation introduces unique hyperparameters that govern the knowledge transfer process, and they require careful tuning.

The most critical is temperature (T), a value used to soften the probability distribution of the teacher's outputs. A higher temperature reveals more nuanced information about the teacher's "reasoning," but finding the optimal value is an empirical process.

Another key hyperparameter is alpha, which balances the loss from the teacher's soft labels against the loss from the ground-truth hard labels. This balance is crucial for success and often requires extensive experimentation.

The Fundamental Performance Limitations

Beyond the practical costs, distillation has inherent limitations that cap the potential of the final student model.

The Teacher's Knowledge is a Ceiling

A student model's performance is fundamentally bounded by the knowledge of its teacher. The student learns to mimic the teacher's output distribution.

Therefore, the student cannot surpass the teacher in accuracy or generalize better on unseen data. It can only hope to become a highly efficient approximation of the teacher's capabilities.

The Risk of Inheriting Biases

Any biases, flaws, or systematic errors present in the teacher model will be directly transferred to and learned by the student model.

Distillation doesn't "clean" the knowledge; it simply transfers it. If the teacher has a bias against a certain demographic or a weakness in a specific data domain, the student will inherit that exact same weakness.

The Challenge of "Negative Knowledge"

If the teacher model is confidently wrong about a specific prediction, it will teach the student to be confidently wrong as well.

This is potentially more harmful than a model that is simply uncertain. The distillation process can amplify the teacher's mistakes, baking them into the smaller, more efficient model where they may be harder to detect.

Is Distillation the Right Tool for Your Goal?

Ultimately, the decision to use distillation depends entirely on your project's primary objective.

- If your primary focus is deploying on resource-constrained environments (like mobile or edge devices): Distillation is a leading technique to achieve the necessary reduction in model size and latency, assuming you can afford the upfront training complexity.

- If your primary focus is maximizing raw predictive accuracy: Distillation is the wrong tool. Your effort is better spent on training the best possible standalone model, as the student will never exceed the teacher's performance.

- If your primary focus is rapid prototyping and iteration: Avoid distillation entirely. The multi-stage pipeline and complex hyperparameter tuning will significantly slow down your development and experimentation cycle.

Understanding these disadvantages allows you to deploy knowledge distillation strategically, recognizing it as a specialized tool for optimization, not a universal method for improvement.

Summary Table:

| Disadvantage | Key Impact |

|---|---|

| Training Complexity | Multi-stage pipeline vs. simple training |

| Computational Cost | High upfront cost for teacher model training |

| Hyperparameter Tuning | Sensitive parameters like temperature (T) and alpha |

| Performance Ceiling | Student model cannot surpass teacher's accuracy |

| Bias Inheritance | Student inherits teacher's flaws and biases |

Need to optimize your lab's AI model deployment without the drawbacks of distillation? KINTEK specializes in providing reliable lab equipment and consumables to support your entire machine learning workflow, from robust computational hardware to efficient data processing tools. Let our experts help you build a more streamlined and effective pipeline. Contact us today to discuss your specific laboratory needs!

Visual Guide

Related Products

People Also Ask

- Why argon is used in magnetron sputtering? The Ideal Gas for Efficient Thin Film Deposition

- How does a high-precision oven contribute to the post-processing of hydrothermal oxidation products? Ensure Data Purity

- What are the disadvantages of sample preparation? Minimize Errors, Costs, and Delays in Your Lab

- What are the applications of optical thin film? Unlocking Precision Light Control

- How does the centrifuge process work? Unlock Rapid Separation for Your Lab

- What is the difference between electric furnace and electric arc furnace? A Guide to Industrial Heating Methods

- Why is an electric arc furnace better than a blast furnace? A guide to modern, sustainable steelmaking

- Does THC distillate lose potency? A guide to preserving your product's power.